I write this to you today from my house, and you are probably reading this note from your house or apartment. Our lives are disrupted. We cannot go to the movies or restaurants, we cannot get our hair cut, we cannot go to weddings or baby showers, and many of us cannot go to our offices or to visit our customers or partners. We cannot go to conferences, and our kids cannot go to school.

We are doing all of this for fear that we or people we love will die of this awful illness. We are reading horror stories from Italy of their healthcare system being overwhelmed. As I write this to you I worry that the same thing will happen in Switzerland and elsewhere. I worry for my family.

This disease spread so quickly across the planet because of the ability of humanity to scale its transportation systems to efficiently move anyone from anywhere to anywhere, whether that’s by train, plane, ship, or automobile. This was largely not the case during the Spanish Flu of 1918. We need to practice “social distancing” even more now than then, because the world is a much smaller and more social place than it was, thanks to all of this capability.

That same human desire to innovate is what is going to save us now. It started early on in the medical community, who have been our first responders in this crisis. They have worked to identify the genetic sequence of the virus itself, to understand its transmission vectors, and to provide the world with initial advice on how to cope with this threat. Even as early as January, researchers across the globe were attempting to develop a vaccine. In the last few days, researchers have reported four types of immune response cells to look for as people begin to recover. There are two studies that detail how malaria medication may both improve recoveries and reduce the virus’ infectiousness.

By dint of necessity, we are virtualizing a great many of our activities. We are all learning how to use Webex Teams or Microsoft Teams or Zoom or Google Hangouts. We are using FaceTime and other remote collaboration tools like never before. One of my friends is planning to virtualize his Passover Seder, and asked for advice on how to do this with Webex. He dubbed this SederEx. We are planning a virtual baby shower with a cousin. I have encouraged my old Kabuki-West crowd to have a virtual Wednesday night dinner together.

The Internet’s funders envisioned its first uses as military. That’s why the Advanced Research Projects Agency (ARPA) funded the activity. Yet it was clear from those early days, and even before then, that electronic communication would continue to reshape how we socialize in the world.

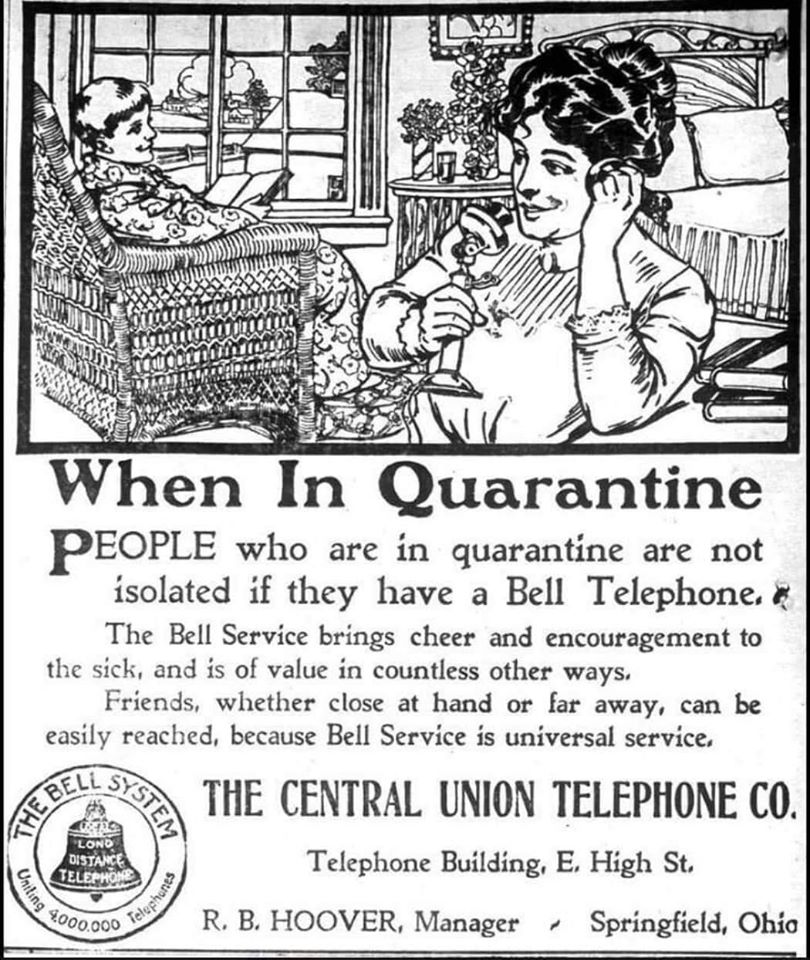

That’s because remote communication didn’t start with the Internet. The invention of the telephone let us “reach out and touch someone”. And that worked great for one-on-one communications. Email gave us the ability to communicate in near real time with those around us. Instant messaging meant that people could hold several disconnected real-time text conversations at once.

Today, however, we can all see each other, present to each other for work, and not only hear but also see people’s reactions. In the face of this plague, people are having virtual baby showers, virtual drinks, and even virtual Passover Seders. You have to provide the non-virtual parts yourself, of course, but we are still able to be together, even when circumstances dictate that we be apart.

For those of us who have family who are a great distance away, this also represents a rare opportunity to participate in these sorts of events on an equal footing, without having the phone passed around for brief moments, simply to say hello. We are all in the same boat, this time.

The Internet is helping us remain social, as it is in our nature to be. Social networks, which in the last few years could not be spoken of in public without some sort of derision, are a big part of the solution. When all of this is over, we will still need to sift through all of the negativity and nastiness that they engender, but let us give them their due as they help us stay connected to one another, as I am connecting to you today.

While we are not indebted in the same way to Internet engineers as we are to medical first responders and those who have to work through this crisis, like grocery store cashiers and police officers, let us also give Internet engineers a pat on the back for helping people self-isolate physically, without having to self-isolate socially.

And by the way, those medical research results I mentioned earlier are being shared by researchers with other researchers in a very timely fashion thanks to the Internet.
