We are running out of addresses for the current version of the Internet Protocol, IPv4. That protocol allows us to have 2^32 devices (about 4 billion systems minus the overhead used to aggregate devices into networks) connected to the network simultaneously, plus whatever other systems are connected via network address translators (NATs). In practical terms it means that the United States, Europe, and certain other countries have been able to all but saturate their markets with the Internet while developing countries have been left out in the cold.

Long ago we recognized that we would eventually run out of IP addresses. The Internet Engineering Task Force (IETF) began discussing this problem as far back as 1990. The result of those discussions was a standardization effort that brought us IP version 6. IPv6 quadrupled the address length, from 32 bits to 128 bits, so that there is for all practical purposes an unlimited amount of space. The problem is that IPv6’s acceptance remains very low.
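To put numbers on that jump, here is a small sketch using Python's standard `ipaddress` module. It only illustrates the arithmetic: making the address four times longer multiplies the number of possible addresses by 2^96, not by four.

```python
import ipaddress

# Address lengths in bits, as exposed by the standard library.
v4_bits = ipaddress.IPV4LENGTH  # 32
v6_bits = ipaddress.IPV6LENGTH  # 128

# Number of distinct addresses each length can represent.
v4_space = 2 ** v4_bits
v6_space = 2 ** v6_bits

# The address is four times longer...
length_ratio = v6_bits // v4_bits

# ...but the space grows by a factor of 2^96.
space_ratio = v6_space // v4_space

print(f"IPv4: {v4_bits} bits, {v4_space:,} addresses")
print(f"IPv6: {v6_bits} bits, {length_ratio}x longer")
print(f"Growth factor is 2^96: {space_ratio == 2 ** 96}")
```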

While IPv6 is deployed in Japan, Korea, and China, its acceptance in the U.S., Europe, and elsewhere has been very poor. It is not the perfect standard. All it does is create a larger address space. It does not fix routing scalability problems and it does not make our networks more secure. No packet format would fix either of those problems.

One of the reasons that IPv6 is not well accepted is that it requires an upgrade to the infrastructure. Anything that uses an IPv4 address must be taught to use an IPv6 address. That is an expensive proposition. IP addresses exist not only in the computer you’re using right now, but in the router that connects your computer, perhaps in your iPhone (if you are a Believer), in power distribution systems, medical systems, your DMV, and in military systems, just to name a few. Changing all of that is a pain.

Back around 1990, I posited a different approach. Within IPv4 there is a reserved address block, 240.0.0.0/4 (16 /8 blocks). What if one could continue to use normal IPv4 address space, but when needed, if the first four bytes of the address field contained an address from that reserved block, one would read the next four bytes as part of the address as well? View that block, if you will, as an area code, and everyone would have one. That would mean that you would only need it if you were contacting someone not in your area code. It would also mean that eventually we would have increased the address space by a factor of 2^28. That’s a big number, and it probably would have sufficed.

Even after these addresses became prevalent, since devices would only need to use them if they were communicating outside their area code, it would mean they could be upgraded at a much slower pace.
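The area code idea can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the proposal was never specified, so the byte layout, the function names (`is_area_code`, `parse_address`), and the return shape are all hypothetical. The only facts taken from the text are that 240.0.0.0/4 marks an area code and that four more bytes follow it.

```python
import struct

# 240.0.0.0/4: the top four bits of the first 32-bit word are 1111.
AREA_CODE_PREFIX = 0xF0000000
AREA_CODE_MASK = 0xF0000000

# Number of distinct area codes in a /4 block: 32 - 4 = 28 free bits.
AREA_CODE_COUNT = 2 ** 28


def is_area_code(word: int) -> bool:
    """True if a 32-bit word falls inside 240.0.0.0/4."""
    return (word & AREA_CODE_MASK) == AREA_CODE_PREFIX


def parse_address(buf: bytes):
    """Parse one hypothetical variable-length address from buf.

    Returns (area_code, address, bytes_consumed). area_code is None
    for a plain four-byte IPv4 address.
    """
    if len(buf) < 4:
        raise ValueError("need at least 4 bytes")
    (first,) = struct.unpack_from("!I", buf, 0)  # network byte order
    if not is_area_code(first):
        return None, first, 4
    if len(buf) < 8:
        raise ValueError("area code present but address is truncated")
    (second,) = struct.unpack_from("!I", buf, 4)
    return first, second, 8


# A local destination needs only the familiar four bytes.
local = parse_address(bytes([192, 0, 2, 1]))

# A destination in another area code carries eight bytes.
remote = parse_address(bytes([240, 0, 0, 7, 192, 0, 2, 1]))
```

The branch on the first word is the price of variable-length addressing: a parser cannot know how long the address is until it has inspected the first four bytes.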

The problem that people had with the idea at the time was that the cost to implement this version of variable-length addressing would have been high from a performance standpoint. Today, routers use fixed-length addresses and can parse them very quickly because of that. But that is only because they have been optimized for today’s world. It might have been possible to optimize for this alternate reality, had it come to pass.

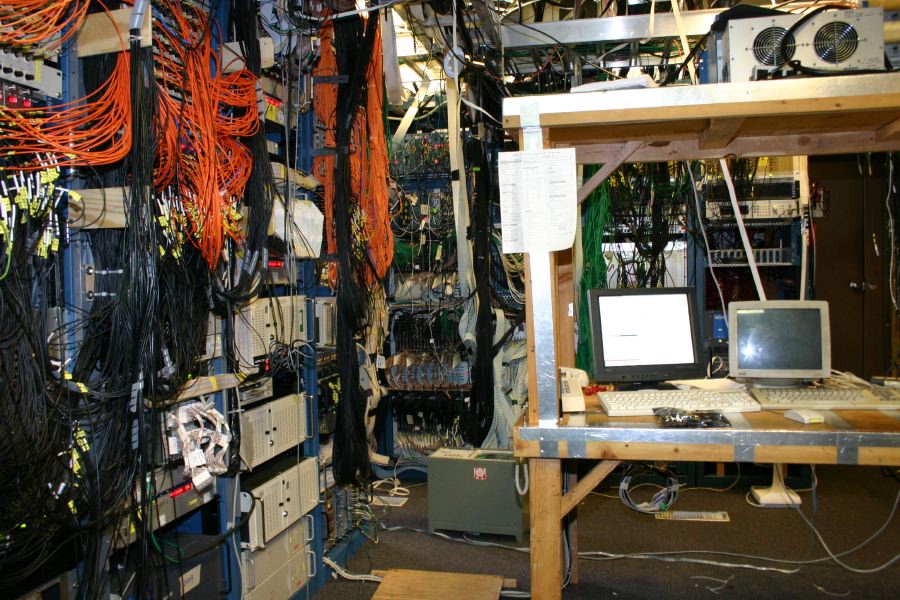

In the summer of 2004 I gave an invited talk at the USENIX Technical Symposium entitled “How Do I Manage All Of This?” It was a plea to the academics that they ease off of new features and figure out how to manage old ones. Just about anything can be managed if you spend enough time. But if you have enough of those things you won’t have enough time. It’s a simple care and feeding argument. When you have enough pets you need to be efficient about both. Computers, applications, and people all require care and feeding. The more care and feeding, the more chance for a mistake. And that mistake can be costly. According to one Yankee Group study in 2003, between thirty and fifty percent of all outages are due to configuration errors. When asked by a reporter what I believed the answer was for dealing with complexity in the network, I replied simply, “Don’t introduce complexity in the first place.”
